“We put our signature on an international achievement in 2015 by laying the foundations of the world's first humanoid robot factory.”

PhD. Özgür AKIN

At AKINSOFT, which set its targets on the very first day of its establishment, I named 2015 as the year in which our work in robotics technologies would enter daily life. To that end, drawing on our many years of experience in information technologies, we started robotics R&D activities in 2009, and in 2015 we put our signature on an international achievement by laying the foundations of the world's first humanoid robot factory.

We never listened to those who kept saying, “Will you discover America again?” or “Why do you even struggle, there are already…”

We said it would be a 100% domestic Turkish robot. We put effort into every single part of it. We established our factory starting from very small parts, because we were not a company backed by capital. We never received support from anywhere. So, in a small laboratory, teaching ourselves, growing and expanding, we climbed the ladder step by step. Now I would like to introduce one of the most important rungs of that ladder: our domestic robot operating system, which we named AROS (AKINROBOTICS OPERATING SYSTEM).

At AKINROBOTICS we have worked on gearwheel systems, pneumatic systems and hydraulic systems; the most recent system we examined is the electromechanical motor. Compared with the other systems, it can be controlled flexibly in terms of electronics and programming. Because of this characteristic, we chose electromechanical motors as the fundamental building blocks of our humanoid robot system structure. This choice brings requirements of its own, however: a motor, a reduction gear, an encoder and a motor driver. In the first R&D phase, these subsystems were developed independently and the system dynamics were determined. Outsourcing these products imposed restrictions and increased costs, so the need arose to manufacture them in this country with domestic resources. As a result of our studies, we completed the R&D process for smart actuators that can be geometrically integrated with all robotic systems. The embedded software that forms the upper layer required by these actuators has also been completed, so the basic building blocks of our humanoid robot architecture are finished and ready to execute all commands.

At this point, the need arose for a humanoid robot operating system that turns the robot's decisions (arm, hand, head, loudspeaker, user screen, eyes, etc.) into commands for its actuators, uses previously obtained data through artificial intelligence, and processes all the combinations of complex data coming from the robot's sensors (acceleration, speed, angle, speaking, hearing). When we look at studies around the world, the system recommended for this need is ROS, the Robot Operating System. That system, however, offers solutions only for code developers and academic activities. So we, as AKINROBOTICS, developed a platform that enables young people, children and even people with no software knowledge to be educated in this field and to program easily.

The name of this platform is AROS, and it contains various units: ARGUI, ARDESIGNER, ARCORE, ARCONTROL, ARINTERFACE and ROBOLIZA.

ARGUI (AKINROBOTICS General User Interface)

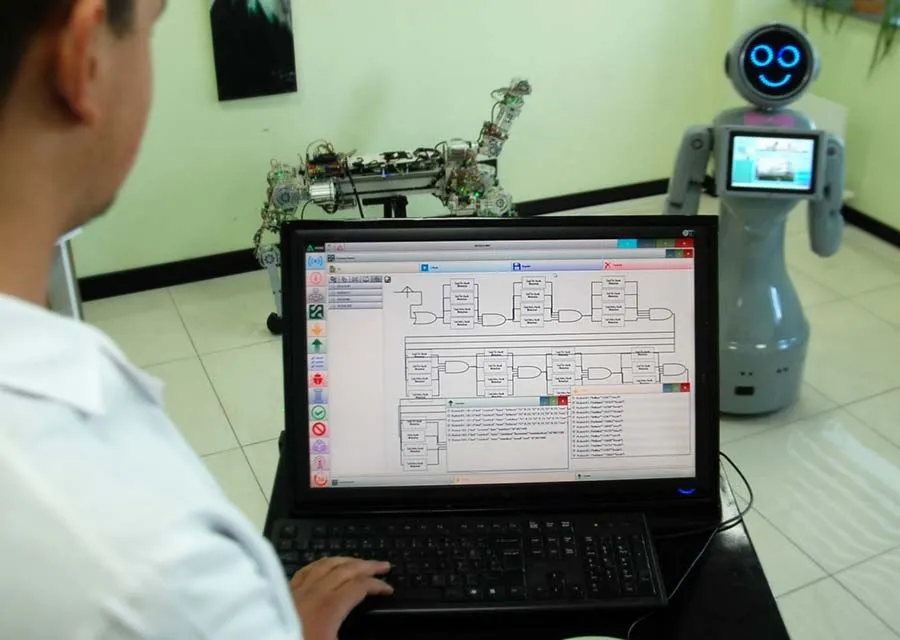

ARGUI is the unit, backed by artificial neural networks, in which many operations are carried out: detecting all the robots connected to the system, tracking telemetry data and storing it in order to train the artificial intelligence, delivering the robot's instant warnings to the user, and uploading behaviours onto the robot. Thanks to the behaviour circuit within ARGUI, which is used for programming the robot and examining its data, users can combine the data gathered from sensors all over the robot with any logic system they wish. They can also program the commands to be given to the robot's actuators; in short, they can train the robot, in other words give it a behaviour or a habit. Programming is done by dragging and dropping components into the behaviour circuit, linking the planned actions to each other and ordering them as the user prefers. Complex behaviours can be organized by accessing all the features (acceleration, speed, angle, speaking, hearing) of the components added to the behaviour circuit. Behaviours assigned to one robot can be installed at the same time on other robots chosen by the user, which makes it possible for robots to perform synchronized behaviours and collaborative tasks.
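The idea of a behaviour circuit can be illustrated with a minimal Python sketch. It is not the AROS implementation: every class, function and sensor name below is hypothetical, and the drag-and-drop ordering is stood in for by a plain ordered list of rules, each pairing a condition on sensor data with an actuator command.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# All names are hypothetical illustrations, not part of the real AROS software.
SensorData = Dict[str, float]
Command = Tuple[str, float]  # (actuator name, target value)


@dataclass
class Rule:
    """One block in the circuit: a condition on sensor data plus a command."""
    condition: Callable[[SensorData], bool]
    command: Command


@dataclass
class BehaviourCircuit:
    name: str
    rules: List[Rule] = field(default_factory=list)

    def add(self, condition: Callable[[SensorData], bool],
            command: Command) -> "BehaviourCircuit":
        self.rules.append(Rule(condition, command))
        return self  # returning self allows blocks to be chained in order

    def run(self, sensors: SensorData) -> List[Command]:
        # Evaluate the rules in the order the user arranged them.
        return [r.command for r in self.rules if r.condition(sensors)]


@dataclass
class Robot:
    name: str
    behaviours: Dict[str, BehaviourCircuit] = field(default_factory=dict)

    def install(self, circuit: BehaviourCircuit) -> None:
        self.behaviours[circuit.name] = circuit


def install_on_all(circuit: BehaviourCircuit, robots: List[Robot]) -> None:
    """Install the same behaviour on every selected robot."""
    for robot in robots:
        robot.install(circuit)


greet = (
    BehaviourCircuit("greet")
    .add(lambda s: s["distance_m"] < 1.5, ("head_angle_deg", 15.0))
    .add(lambda s: s["sound_level_db"] > 60.0, ("speaker_volume", 0.8))
)
robots = [Robot("r1"), Robot("r2")]
install_on_all(greet, robots)

readings = {"distance_m": 1.0, "sound_level_db": 40.0}
commands = robots[1].behaviours["greet"].run(readings)
print(commands)  # [('head_angle_deg', 15.0)]
```

Because both robots hold the same circuit, identical sensor readings produce identical commands, which is the simplest form of the synchronized behaviour described above.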

ARCORE (AKINROBOTICS Core Software)

It fulfills tasks such as processing, on the robot, the actions coming from ARGUI and training these behaviours and the artificial intelligence systems. After behaviours have been uploaded with ARGUI, the robots can serve independently of ARGUI in any field they were trained for and can develop their behaviour by analysing the situations they encounter. It generates the required outputs by processing the sensor data (object perception, smell, temperature, encoder, gyroscope, etc.) received from ARCORE, ARCONTROL and ARINTERFACE with the artificial intelligence model, and sends them to the actuators.

ROBOLIZA (Robotic Cloud Software)

The experiences obtained by ARCORE are gathered in Roboliza. The gathered data forms a big data set, which is cross-referenced and processed with deep learning algorithms.

The processed data and the improvements are sent back to all the robots. In this way, a robot's development is not limited to its own experiences but is also shaped by the developments of the other robots. The result is a rapidly improving deep learning system. Taking location into account, Roboliza enables all the robots to have up-to-date algorithms and experiences at any moment.
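The gather, process and send-back cycle can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the parameter names are invented, and simple per-parameter averaging stands in for the deep learning step, which the text does not specify.

```python
from typing import Dict, List

Params = Dict[str, float]  # hypothetical "experience" expressed as named values


def merge_experiences(uploads: List[Params]) -> Params:
    """Average each parameter over the robots that reported it.

    Plain averaging is a placeholder for the cloud-side learning step.
    """
    totals: Dict[str, float] = {}
    counts: Dict[str, int] = {}
    for params in uploads:
        for key, value in params.items():
            totals[key] = totals.get(key, 0.0) + value
            counts[key] = counts.get(key, 0) + 1
    return {key: totals[key] / counts[key] for key in totals}


# Each robot uploads what it has learned so far.
uploads = [
    {"grip_force": 2.0, "step_length": 0.30},
    {"grip_force": 4.0},
]
merged = merge_experiences(uploads)

# The merged result is sent back to every robot in the fleet.
fleet = {"r1": dict(uploads[0]), "r2": dict(uploads[1])}
for name in fleet:
    fleet[name] = dict(merged)
```

After the update, the second robot holds a `step_length` value it never measured itself, which is the point of sharing experience across the fleet.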

ARCONTROL (AKINROBOTICS Control)

It enables ARCORE to access the subunits on the robot (embedded software, image processing, speech and perception). It links the embedded software with ARCORE and makes it possible to control and run all the parts synchronously. The image processing algorithms inside it run on the graphics card in a synchronized manner and give real-time results in less than human reaction time. They perform human detection, recognition and tracking as well as object detection, recognition and tracking. The robots thus recognize people and store the information in a database in order to share it with other robots. By measuring light intensity, the robot forms notions of darkness and light, and it navigates by processing the environment's map data with image processing algorithms. This eliminates the need for a human to define walkways and restricted areas on the map, and allows the map to be improved in a matter of seconds. The robot can hold a dialogue with people thanks to speech recognition and sound synthesis algorithms. Sounds received from the environment are first converted into text by the speech recognition algorithms. This text is processed; notions such as command, question, subject, object, location and direction are determined and conveyed to ARCORE. The system then passes the text processed in ARCORE to the sound card via the sound synthesis algorithms, enabling the robot to speak.
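The text-processing step of this dialogue chain, in which recognized text is reduced to notions such as command, question and direction, can be sketched as follows. The word lists and the `Utterance` type are assumptions made for illustration; a simple keyword rule stands in for whatever language processing ARCONTROL actually uses.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical vocabularies; a real system would use a trained language model.
QUESTION_WORDS = {"what", "where", "who", "when", "why", "how"}
DIRECTIONS = {"left", "right", "forward", "back"}


@dataclass
class Utterance:
    kind: str                 # "question" or "command"
    direction: Optional[str]  # first direction word found, if any


def analyse(text: str) -> Utterance:
    """Classify recognized text and pull out a direction word if present."""
    words = re.findall(r"[a-z']+", text.lower())
    starts_with_question_word = bool(words) and words[0] in QUESTION_WORDS
    is_question = text.strip().endswith("?") or starts_with_question_word
    direction = next((w for w in words if w in DIRECTIONS), None)
    return Utterance("question" if is_question else "command", direction)


result = analyse("Turn left at the door")
print(result)  # Utterance(kind='command', direction='left')
```

The structured result, rather than the raw text, is what would be handed on to the decision-making unit.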

ARDESIGNER and ARINTERFACE (AKINROBOTICS Design and Interface)

In this unit, the user accesses ARDESIGNER via ARGUI. This is the system that lets the robot programmer install an interface designed in ARDESIGNER on the robot and display it through ARINTERFACE. Smart devices, which advancing technology has made almost essential to human life, have added a new channel to the ways people communicate: the touch screen. The use of touch screens in phones, tablets, PCs and the information screens found in public spaces such as kiosks, banks and malls has made them a widespread and popular means of communication. With this development in mind, AKINROBOTICS developed ARDESIGNER and ARINTERFACE. ARDESIGNER is used to prepare the interface through which people and robots communicate with each other. ARGUI carries out the installation by programming the prepared interface with the desired behaviour model. ARINTERFACE shows the interface to the user, sends the user's touch inputs to ARCORE through that interface, which the robot programmer can design in any way, and displays the processed data coming back from ARCORE.
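The round trip between the touch screen and the core can be pictured with a small Python sketch. The `Core` and `Interface` classes are hypothetical stand-ins for ARCORE and ARINTERFACE, and the list of button ids stands in for a layout prepared in a designer tool; none of this reflects the real AROS API.

```python
from typing import Dict, List


class Core:
    """Stand-in for the core unit: maps a touched button id to a response."""

    def __init__(self, responses: Dict[str, str]) -> None:
        self.responses = responses

    def handle_touch(self, button_id: str) -> str:
        return self.responses.get(button_id, "unknown request")


class Interface:
    """Stand-in for the on-robot screen: forwards touches, shows results."""

    def __init__(self, layout: List[str], core: Core) -> None:
        self.layout = layout          # button ids defined by the designer
        self.core = core
        self.screen: List[str] = []   # messages currently displayed

    def touch(self, button_id: str) -> None:
        if button_id not in self.layout:
            return  # ignore touches outside the designed interface
        self.screen.append(self.core.handle_touch(button_id))


core = Core({"menu": "showing menu", "help": "calling staff"})
ui = Interface(["menu", "help"], core)
ui.touch("help")
ui.touch("exit")  # not part of the layout, so nothing is displayed
print(ui.screen)  # ['calling staff']
```

Keeping the layout separate from the response logic mirrors the split described above: the interface is designed in one place and the behaviour behind it is programmed in another.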

These systems, which we developed for the robots we produce as AKINROBOTICS, our country's domestic robotics brand, offer functional and ergonomic use to the end user. With this system, users can program the projects they want in a short time.

They said, “You can't; you are in Turkey, you were born there,” and so on. Leave all that behind. Leave your copyist, rote-learning mentality; do not copy. Produce by yourself and see what happens. The human brain is like this: if it does not see, it produces by itself; otherwise, it copies. Try not to see. Put your blinders on sometimes. Will you discover America again? No, you will not discover America; you will discover a land of technology. You will establish Turkey as the land of technology. Our aim is not to discover America again. They can keep standing on their own feet. I am issuing a challenge! We will be the world's giant in robotics technology. I hold the torch; I am passing it to you. Because this is a matter of our country.

The matter is not me; the matter is our country, it is you.